Open-vocabulary Pose Estimation using Feature Fields

Ongoing project

We introduce a novel pose-estimation pipeline that maps natural-language prompts such as “Stand behind the chair facing the table” to one or more SE(3) robot poses in a pre-scanned scene. A NeRF-style implicit feature field distills 2-D CLIP embeddings so the robot can render language-aligned relevance maps from arbitrary viewpoints. The query is decomposed into clustered local objectives; cost programs are synthesized and optimized with CMA-ES, while a multimodal LLM verifier supplies counter-examples and refinements. The pipeline supports goals where reaching the pose is itself the objective as well as poses that set up downstream tasks. We plan to release a comprehensive benchmark and an open-source implementation.

Ticking in Tandem: Centrally Coordinated ROS System Resource-Management

Ongoing project; talk proposal submitted to ROSCon 2025

Tandem is a centralized, intent-driven resource manager for ROS Noetic and Humble robots. It inspects the live ROS graph together with developer-provided intents to coordinate CPU, GPU, memory and I/O usage across nodes. The scheduler synthesizes low-level actions that satisfy intents such as end-to-end latency or throughput constraints while avoiding contention. The design builds on lessons from our earlier ConfigBot system. Our ROSCon talk outlines the architecture, demonstrates the workflow, and invites community feedback on intent-based resource scheduling.

Past Research

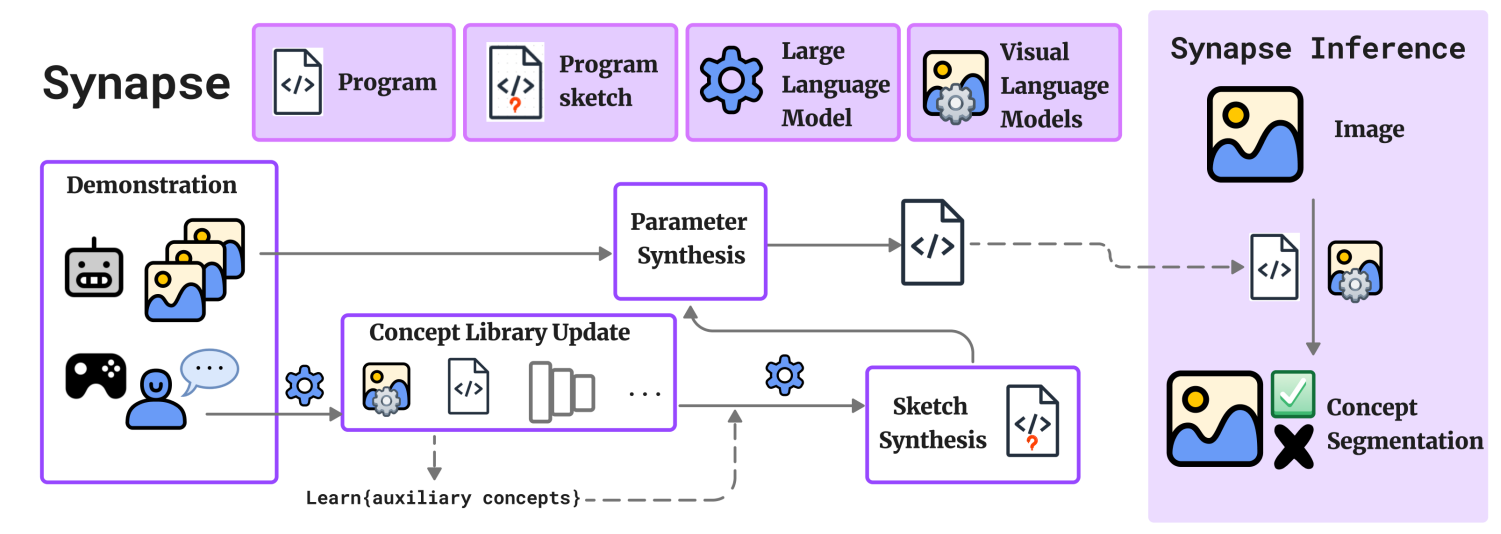

SYNAPSE: SYmbolic Neural-Aided Preference Synthesis Engine

Published at AAAI 2025 (Oral)

This paper addresses the problem of preference learning, which aims to align robot behaviors by learning user-specific preferences (e.g., "good pull-over location") from visual demonstrations. Despite its similarity to learning factual concepts (e.g., "red door"), preference learning is a fundamentally harder problem due to its subjective nature and the paucity of person-specific training data. We address this problem with SYNAPSE, a novel neuro-symbolic framework designed to efficiently learn preferential concepts from limited data. SYNAPSE represents preferences as neuro-symbolic programs in a domain-specific language (DSL) that operates over images, which makes the individual parts of a learned preference open to inspection for alignment. It leverages a novel combination of visual parsing, large language models, and program synthesis to learn programs representing individual preferences. We perform extensive evaluations on various preferential concepts, as well as user case studies demonstrating its ability to align well with dissimilar user preferences. Our method significantly outperforms baselines, especially in out-of-distribution generalization. We show the importance of the design choices in the framework through multiple ablation studies.

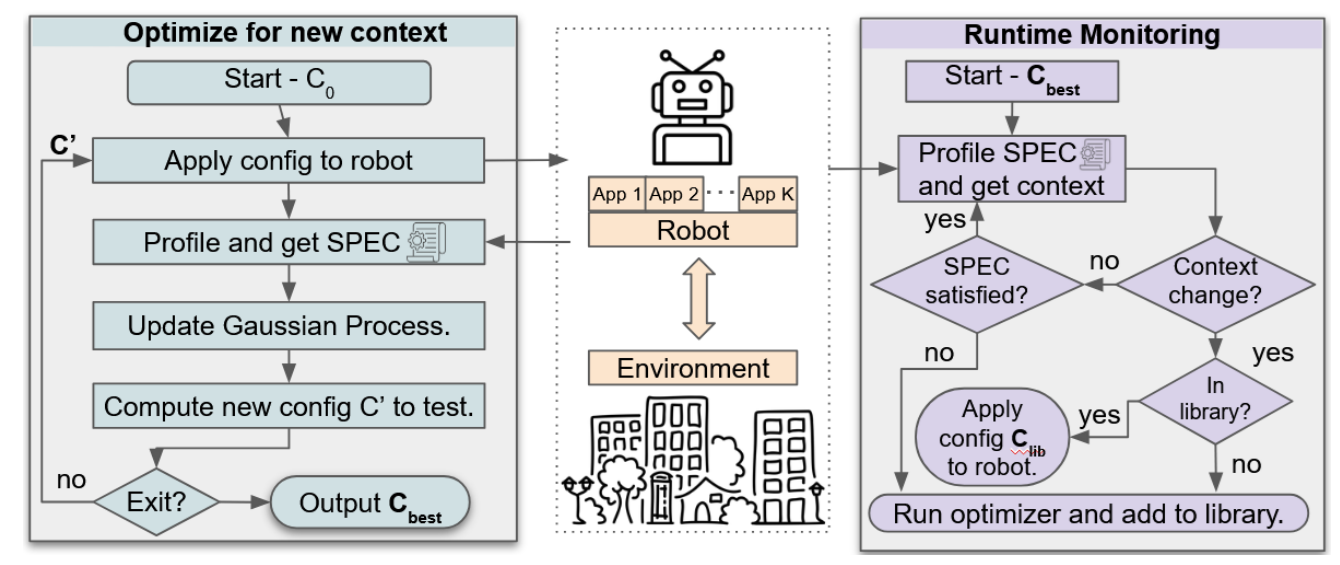

ConfigBot: Adaptive Resource Allocation for Robot Applications

Published at IROS 2025

The growing use of service robots in dynamic environments requires flexible management of on-board compute resources to optimize the performance of diverse tasks such as navigation, localization, and perception. Current robot deployments often rely on static OS configurations and system over-provisioning. These approaches are suboptimal because they do not account for variations in resource usage, which results in poor system-wide behavior such as robot instability or inefficient resource use. This paper presents ConfigBot, a novel system designed to adaptively reconfigure robot applications to meet a predefined performance specification by leveraging runtime profiling and automated configuration tuning. Through experiments on multiple real robots, each running a different stack with diverse and potentially context-dependent performance requirements, we illustrate ConfigBot's efficacy in maintaining system stability and optimizing resource allocation. Our findings highlight the promise of automatic system configuration tuning for robot deployments, including adaptation to dynamic changes.

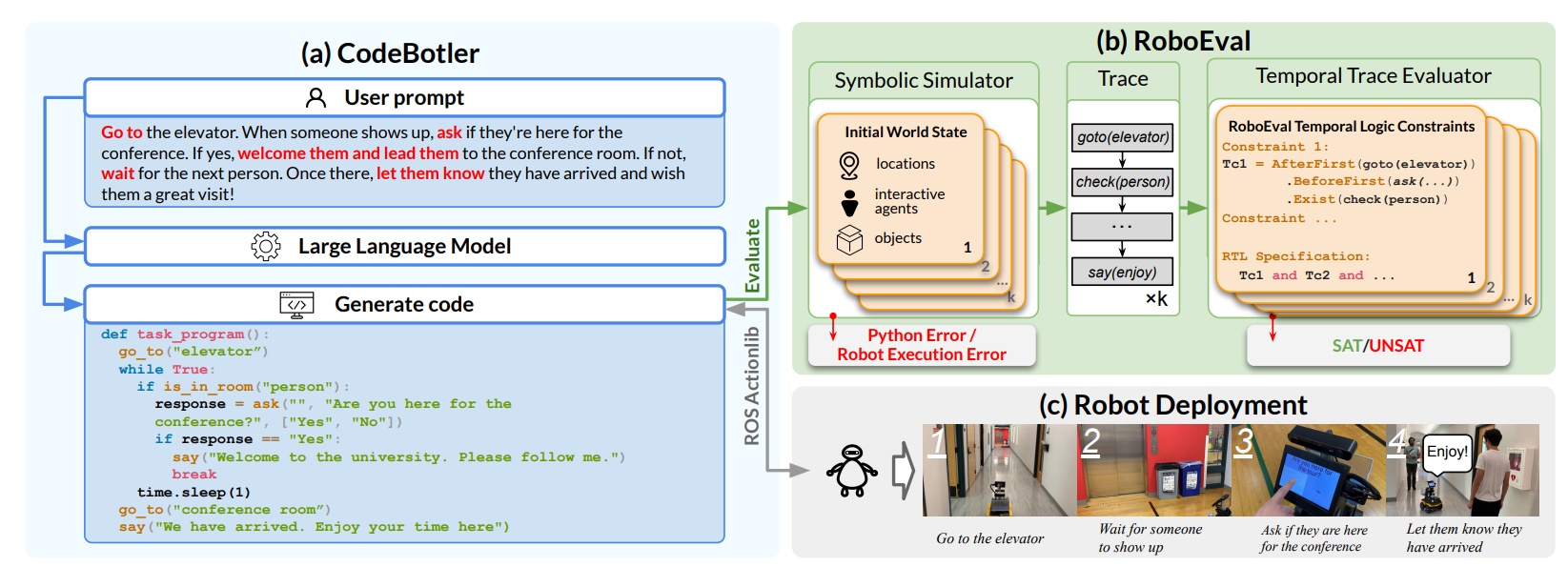

CodeBotler + RoboEval: Deploying and evaluating LLMs to program service mobile robots

Published at RA-L 2024

Recent advancements in large language models (LLMs) have spurred interest in using them to generate robot programs from natural language, with promising initial results. We investigate the use of LLMs to generate programs for service mobile robots that leverage mobility, perception, and human interaction skills, and where accurate sequencing and ordering of actions is crucial for success. We contribute CodeBotler, an open-source robot-agnostic tool to program service mobile robots from natural language, and RoboEval, a benchmark for evaluating LLMs' capabilities of generating programs to complete service robot tasks. CodeBotler performs program generation via few-shot prompting of LLMs with an embedded domain-specific language (eDSL) in Python, and leverages skill abstractions to deploy generated programs on any general-purpose mobile robot. RoboEval evaluates the correctness of generated programs by checking execution traces starting from multiple initial states, and checking whether the traces satisfy temporal logic properties that encode correctness for each task. RoboEval also includes multiple prompts per task to test the robustness of program generation. We evaluate several popular state-of-the-art LLMs with the RoboEval benchmark and perform a thorough analysis of their failure modes, resulting in a taxonomy that highlights common pitfalls of LLMs at generating robot programs.

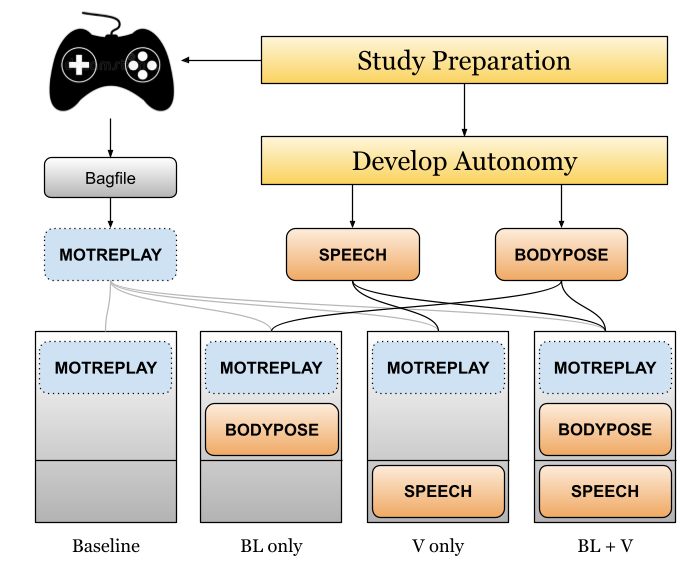

Vid2Real HRI: Align Video-based HRI Study Designs with Real-world Settings

Published at RO-MAN 2024

HRI research using autonomous robots in real-world settings can produce results with the highest ecological validity of any study modality, but many difficulties limit such studies' feasibility and effectiveness. We propose Vid2Real HRI, a research framework to maximize real-world insights offered by video-based studies. The Vid2Real HRI framework was used to design an online study using first-person videos of robots as real-world encounter surrogates. The online study (n=385) distinguished the within-subjects effects of four robot behavioral conditions on perceived social intelligence and human willingness to help the robot enter an exterior door. A real-world, between-subjects replication (n=26) using two conditions confirmed the validity of the online study's findings and the sufficiency of the participant recruitment target (22) based on a power analysis of online study results. The Vid2Real HRI framework offers HRI researchers a principled way to take advantage of the efficiency of video-based study modalities while generating directly transferable knowledge of real-world HRI.

Perceived Social Intelligence and Human Compliance in Incidental Human-Robot Encounters

Under review at Expert Systems

This study examines how socially compliant robot behaviors, operationalized as body language and verbal cues, affect human perceptions of social intelligence and compliance with a quadruped robot during incidental human-robot encounters. Extending prior work that primarily focused on direct human-robot interactions, this study addresses the understudied context of incidental encounters, which are brief, unplanned interactions between bystanders and autonomous robots in public environments. In an online video-based study with 385 participants, we found that both verbal and body language behaviors significantly improved the robot's perceived social intelligence (PSI), which in turn positively correlated with human compliance. Verbal communication alone increased compliance likelihood more than body language, and combining both yielded the highest PSI ratings and compliance scores. To validate these findings beyond controlled video scenarios, a follow-up real-world study with 26 participants replicated the results: 11 of 13 participants complied in the Body Language + Verbal condition versus only 1 in the baseline condition. Free-text responses revealed the importance of clearly stated intentions and politeness, as well as concerns about robot legitimacy and safety. Together, these findings highlight the critical role of perceived social intelligence in fostering human assistance for robots during incidental encounters and offer design strategies to support robot deployment in public environments.

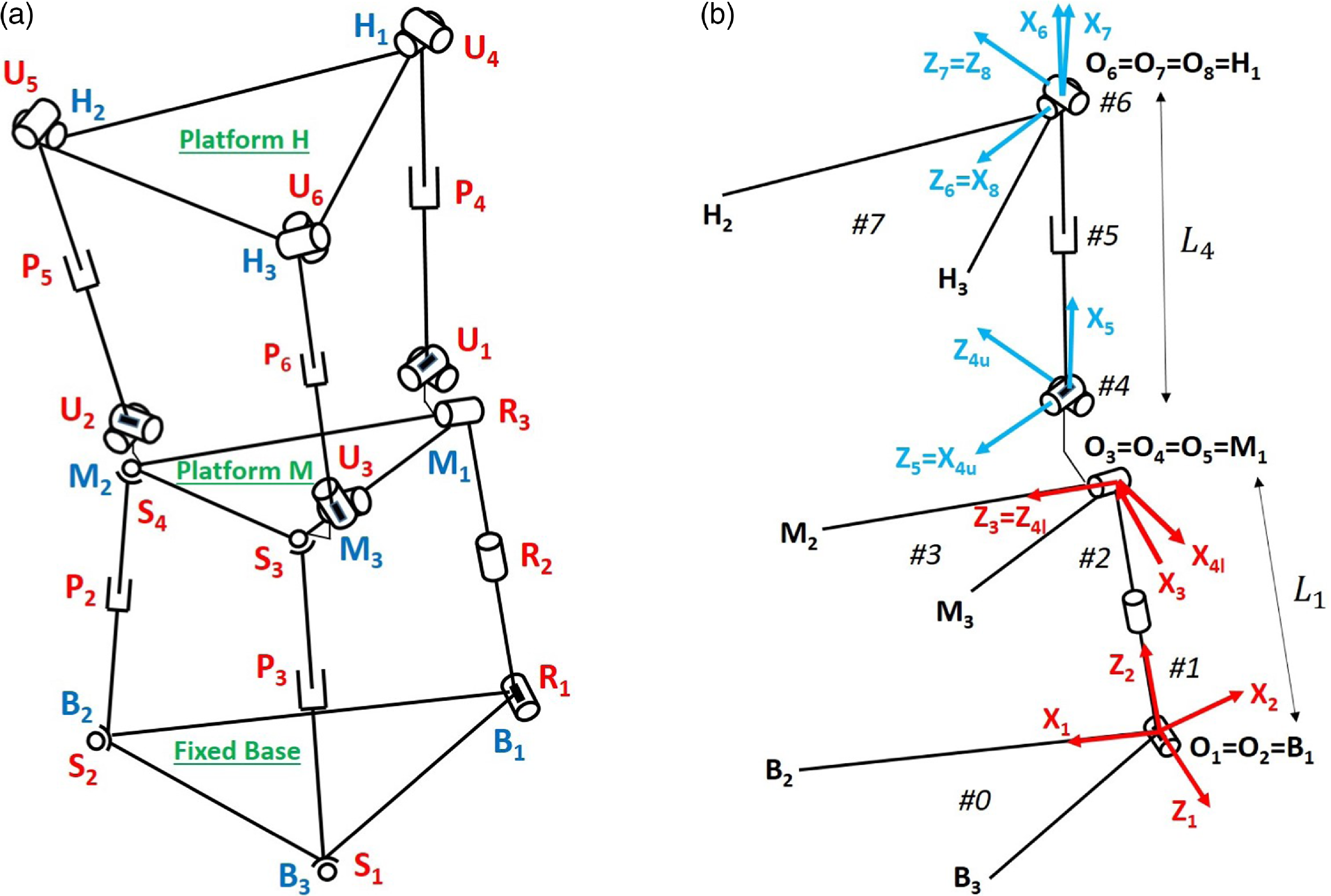

Kinematics and Singularity Analysis of a Novel Hybrid Industrial Manipulator

Published at Robotica (Cambridge University Press) 2023

This paper proposes a new type of hybrid manipulator that could be widely used in industries where translational motion is required while maintaining an arbitrary end-effector orientation. It consists of two serially connected parallel mechanisms, each with three degrees of freedom, of which the upper platform performs a pure translational motion with respect to the mid-platform. Closed-form forward and inverse kinematic analyses of the proposed manipulator are carried out, followed by the determination of all of its singular configurations. The theoretical results are verified numerically, and 3D modeling and simulation of the manipulator are also performed. A simple optimal design based on maximizing kinematic manipulability is presented, which further demonstrates the potential of the proposed hybrid manipulator.

Workshops, LBRs, & Short Papers

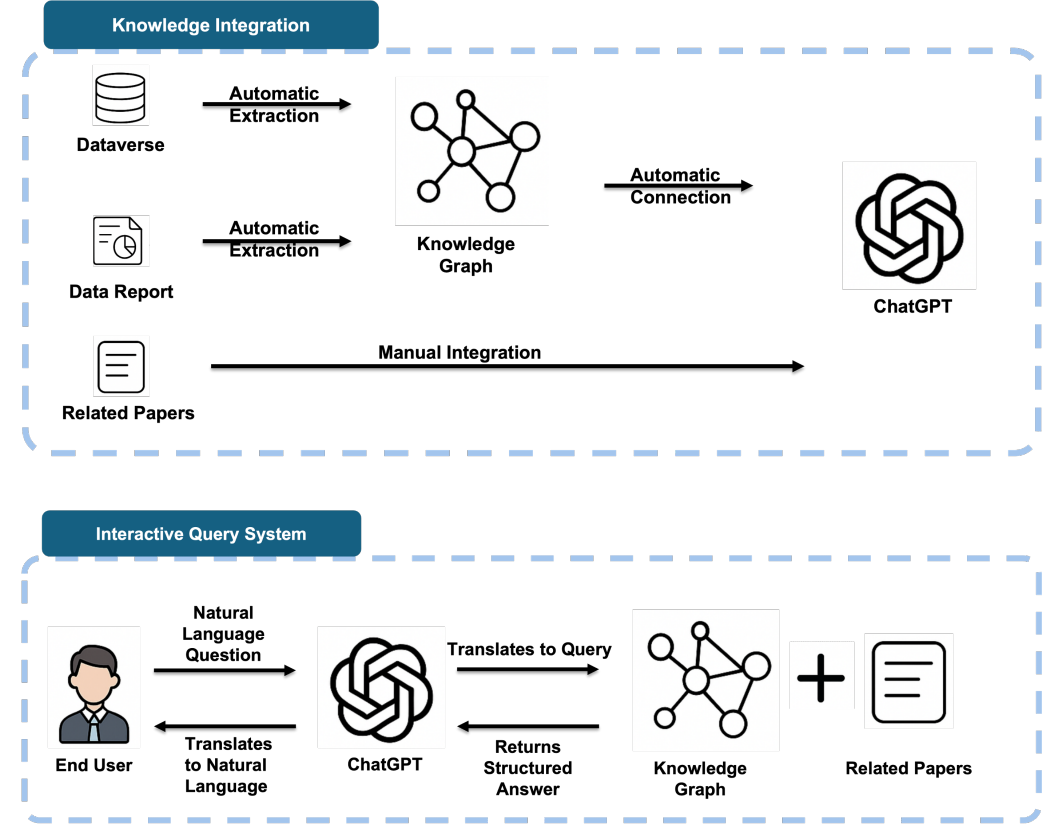

Curate, Connect, Inquire: A System for Findable Accessible Interoperable and Reusable (FAIR) Human-Robot Centered Datasets

Workshop paper at ICRA 2025 Workshop on Human-Centered Robot Learning (HCRL)

The rapid growth of AI in robotics has amplified the need for high-quality, reusable datasets, particularly in human-robot interaction (HRI) and AI-embedded robotics. While more robotics datasets are being created, the landscape of open data in the field is uneven. This is due to a lack of curation standards and consistent publication practices, which makes it difficult to discover, access, and reuse robotics data. To address these challenges, this paper presents a curation and access system with two main contributions: (1) a structured methodology to curate, publish, and integrate FAIR (Findable, Accessible, Interoperable, Reusable) human-centered robotics datasets; and (2) a ChatGPT-powered conversational interface trained on the curated datasets' metadata and documentation to enable exploration and comparison of robotics datasets, as well as data retrieval, using natural language. Developed from practical experience curating datasets from robotics labs within Texas Robotics at the University of Texas at Austin, the system demonstrates the value of standardized curation and persistent publication of robotics data. The system's evaluation suggests that the accessibility and understandability of human-robot data are significantly improved. This work directly aligns with the goals of the HCRL @ ICRA 2025 workshop and represents a step toward more human-centered access to data for embodied AI.

Shaping Perceptions of Robots With Video Vantages

Late-breaking report at HRI 2025

This study investigates the role of video vantage, “Encounterer” and “Observer”, in shaping perceptions of robot social intelligence. Using videos depicting robots navigating hallways and employing gaze cues, we found that the Observer vantage consistently yielded higher ratings of perceived social intelligence than the Encounterer vantage. These findings underscore the impact of vantage on interpreting robot behaviors and highlight the need for careful design of video-based HRI studies to ensure accurate and generalizable insights for real-world applications.

Multi-Factor Visual SLAM Failures in Mobile Service Robot Deployments

Spotlight Talk at RSS 2023 Workshop on Towards Safe Autonomy: New Challenges and Trends in Robot Perception

Miscellaneous

ICRA 2023 BARN Challenge on Autonomous Navigation

Participated in the BARN (Benchmark Autonomous Robot Navigation) Challenge, developing and testing autonomous navigation algorithms for mobile robots in complex, constrained environments.

Amazon Lab126 Astro SDK Workshop (Invite-Only)

Internal workshop at Amazon Lab126 to explore and develop applications using the Amazon Astro home robot SDK preview.